Robotic Infrastructure Inspection

Motivation

Modern-day infrastructure inspection is commonly performed visually by human inspectors, who have difficulty detecting defects and measuring their quantity or size on a large scale. This problem is often attributed to a lack of large-scale measurement tools that can rapidly quantify objects of interest.

Other problems with manual inspection include:

- Subjectivity

- Accessibility of elements

- Inspector fatigue

- Inconsistency among inspectors

Example: bridge inspections. Bridge inspections in Ontario (as in the rest of Canada and the USA) are performed on a biennial basis, or more frequently as warranted by the condition of a particular bridge. Human inspectors look for defects of interest, including cracks, concrete spalls, concrete delaminations, and corrosion. The size and quantity of these defects are estimated and recorded, and these subjective estimates are combined into an overall condition rating for the bridge or element. The precise change in defect size and its effect on the structure’s integrity are not explicitly calculated. Furthermore, to achieve a reasonable degree of accuracy in defect detection and quantification, inspectors are expected to perform the inspections from no more than an arm’s length away from the elements being assessed. This requirement is not always met because of accessibility issues combined with a lack of inspection funds or potential traffic disruption (see Fig. 1 and 2).

Figure 1: Bridge Master being used for inspection on the Conestogo River Bridge.

Figure 2: Inspection of the Gardiner Expressway bridge.

This research work aims to use mobile robotics to help supplement or replace human visual inspection of infrastructure. We primarily focus on inspection of the following types of infrastructure:

- Bridges

- Parking Structures

- Nuclear Facilities

- General Industrial Plants

The benefits of using robotics for inspection include:

- Repeatability

- Increased accuracy and detail

- Elimination of user subjectivity

- Increased accessibility

We are studying the use of unmanned ground vehicles (UGVs), unmanned aerial vehicles (UAVs) and unmanned surface vessels (USVs). The focus of our work is on the sensor package, along with all software required to perform such inspections. The goal is a sensor and software suite that can be mounted on whichever robotic platform best suits the application.

Our core contribution is the combination of 3D lidar maps with images and image analysis (using AI or standard image processing) for fully automated defect detection and quantification. In our work, defects are located in the images and quantified/tracked using the point cloud map.

Robots

Three prototype inspection robots have been developed for infrastructure inspection research. All sensors are time-synchronized and calibrated both intrinsically, to estimate each sensor's internal parameters, and extrinsically, to estimate the physical transformation between sensor frames.
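To illustrate how the two kinds of calibration are used together, here is a minimal sketch that maps a lidar point to a camera pixel. It assumes an ideal pinhole camera with no lens distortion (which would not hold for the fisheye lenses), and the function name and parameters (`R`, `t`, `fx`, `fy`, `cx`, `cy`) are hypothetical, not taken from the project's software.

```python
def lidar_point_to_pixel(p_lidar, R, t, fx, fy, cx, cy):
    """Map a 3D point from the lidar frame to pixel coordinates.

    R (3x3 nested lists) and t (3-vector) are the extrinsic calibration:
    the rotation and translation from the lidar frame to the camera frame.
    fx, fy, cx, cy are the intrinsic calibration of an assumed pinhole camera.
    """
    # Extrinsic step: express the point in the camera frame.
    x, y, z = (
        sum(R[row][col] * p_lidar[col] for col in range(3)) + t[row]
        for row in range(3)
    )
    if z <= 0:
        return None  # the point is behind the camera and cannot be imaged
    # Intrinsic step: perspective projection onto the image plane.
    return (fx * x / z + cx, fy * y / z + cy)


# Example with an identity extrinsic (lidar and camera frames coincide).
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
pixel = lidar_point_to_pixel((1.0, 0.0, 2.0), IDENTITY, (0, 0, 0), 500, 500, 320, 240)
```

An error in either calibration shifts where lidar points land in the image, which is why both are estimated before any image information is attached to the map.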

Husky UGV (Inspector-Gadget)

Figures 3 and 4: Husky-based inspection UGV with sensors including (A) Vertical Lidar (VLP-16 HiRes), (B) Horizontal Lidar (VLP-16 Lite), (C) Swift Nav Piksi-Multi RTK GPS, (D) 3 Flir BlackflyS machine vision cameras with narrow FOV and Fisheye lenses, (E) Flir ADK Infrared Spectrum Camera, (F) Inertial Measurement Unit (UM7), and (G) Custom PCBs for data i/o and hardware triggering.

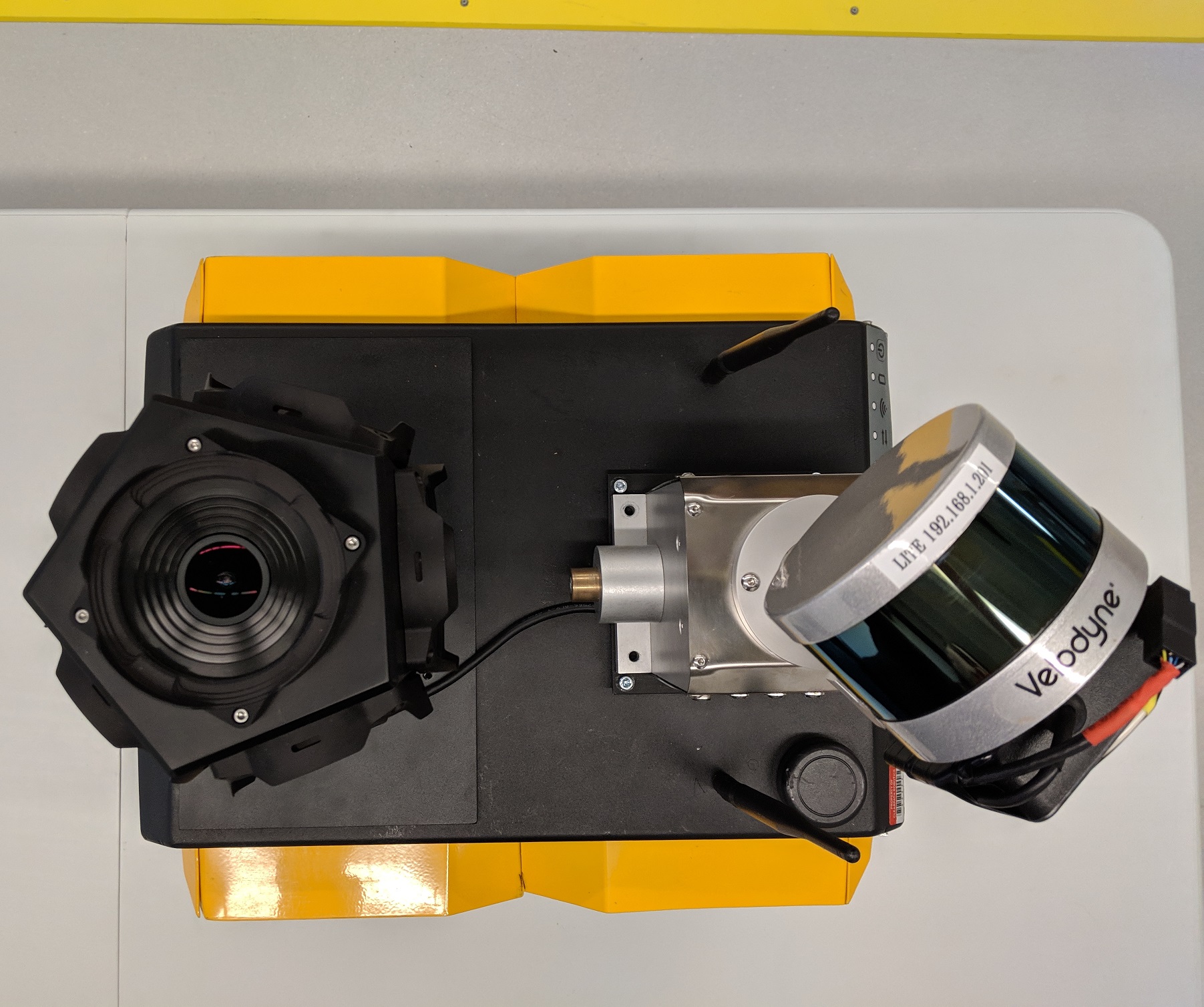

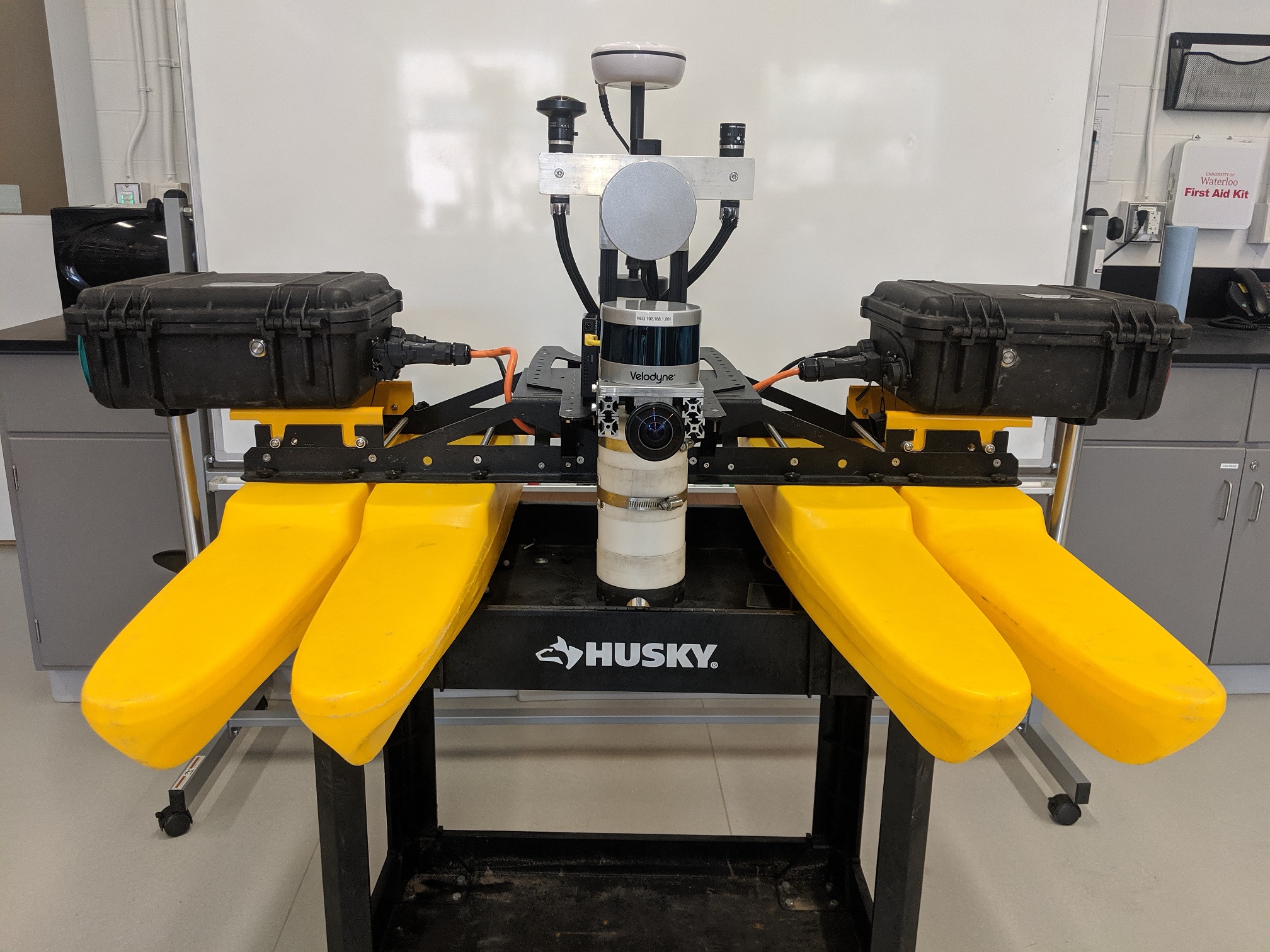

Jackal UGV (RobEn)

Figures 5 and 6: Jackal-based inspection UGV with sensors including (A) Rotating Lidar (VLP-16), (B) Flir Ladybug5+, (C) GPS, and (D) IMU.

King Fisher USV

The above robotic platforms are specifically designed for ground/surface-based infrastructure inspection.

Mapping Results

The mapping procedure currently runs in two separate stages. First, an online SLAM solution produces a real-time, lower-quality map. This allows the operator to view the map as it is being generated and confirm full coverage of the area of interest. After data collection is complete, the map is automatically refined using a batch optimization approach implemented with GTSAM. Online mapping is currently performed using either an EKF or LOAM (Lidar Odometry and Mapping). We are currently working on a fixed-lag smoother implementation for better online mapping.
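The idea behind the batch refinement step can be sketched with a toy example. The code below does not use GTSAM and is not the project's implementation: it is a one-dimensional pose graph, solved with simple Gauss-Seidel iterations on the least-squares problem, where all names and measurements are made up for illustration. It shows how a single extra constraint (for example a loop closure) redistributes accumulated odometry drift across all poses.

```python
def refine_poses(num_poses, constraints, iterations=500):
    """Least-squares refinement of 1D poses with pose 0 fixed at 0.0.

    constraints is a list of (i, j, d) meaning "pose j minus pose i was
    measured as d". Each sweep sets every free pose to the average of the
    values implied by its constraints, which is a Gauss-Seidel update on
    the normal equations of sum((x[j] - x[i] - d) ** 2).
    """
    x = [0.0] * num_poses
    for _ in range(iterations):
        for k in range(1, num_poses):  # pose 0 is the fixed anchor
            implied = []
            for i, j, d in constraints:
                if j == k:
                    implied.append(x[i] + d)
                elif i == k:
                    implied.append(x[j] - d)
            if implied:
                x[k] = sum(implied) / len(implied)
    return x


# Three odometry steps of 1.0 m each, plus one constraint saying the
# last pose is actually only 2.7 m from the start.
constraints = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (0, 3, 2.7)]
refined = refine_poses(4, constraints)
# refined is approximately [0.0, 0.925, 1.85, 2.775]
```

A real batch SLAM problem works on 3D poses with weighted, nonlinear factors, but the principle is the same: all measurements are considered at once, so late corrections improve early poses as well.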

Full Batch SLAM

Full batch SLAM results from RobEn in the UW structures lab, including colour from Ladybug camera.

Full batch SLAM results from Inspector Gadget on a parking garage in Kitchener, ON.

Online Mapping

Online mapping of the Conestogo River bridge in Waterloo, ON, with Inspector Gadget.

Online mapping of the Fairway Road bridge in Kitchener, ON, with Inspector Gadget.

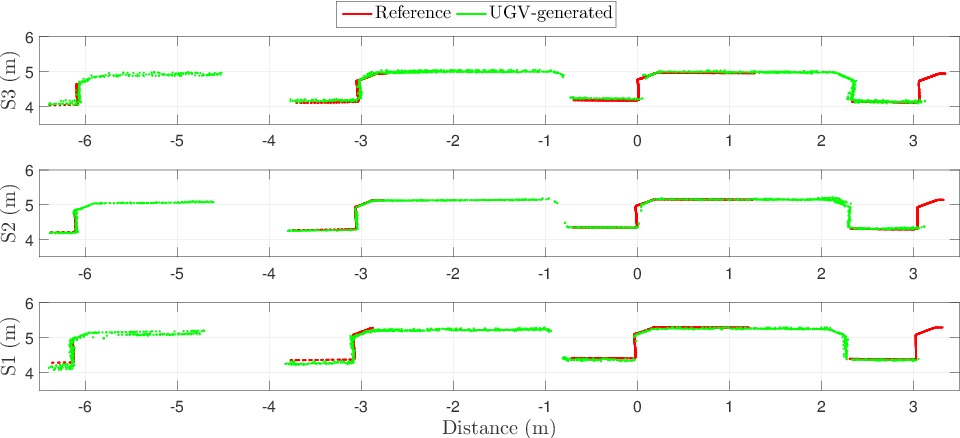

Figure 9 shows the results from the Conestogo Bridge mapping compared against a baseline scan from a Faro Focus laser scanner. Here, we take cross-sections of the maps generated by our platform (green) and compare them to cross-sections from the Faro scanner (red) at the same locations on the bridge. This illustrates the accuracy achievable with this preliminary mapping software.

Generating this map with our platform took on the order of 5 to 10 minutes, whereas scanning the same area with the Faro took us approximately two hours.

Figure 9: Scan results of our platform compared to Faro Focus on the Conestogo River Bridge.
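One simple way to put a number on a cross-section comparison like the one above is the nearest-neighbour distance from each point in our cross-section to the reference cross-section. The sketch below is an assumed metric for illustration only (the source does not state how the comparison was quantified), and the sample coordinates are invented.

```python
import math


def nearest_distance(point, reference):
    """Distance from one 2D point to the closest point of the reference section."""
    return min(math.hypot(point[0] - q[0], point[1] - q[1]) for q in reference)


def cross_section_error(ours, reference):
    """Return (mean, max) nearest-neighbour distance, in the units of the input."""
    distances = [nearest_distance(p, reference) for p in ours]
    return sum(distances) / len(distances), max(distances)


# Invented cross-section samples in metres: (horizontal offset, height).
reference_section = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
our_section = [(0.0, 0.01), (1.0, 0.02), (2.0, 0.0)]
mean_error, max_error = cross_section_error(our_section, reference_section)
```

This brute-force search is quadratic in the number of points, which is fine for a single cross-section; a spatial index would be the usual choice for full point clouds.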

Inspection Results

The core contribution of our work is the extension of 3D mapping to perform automated inspection. To do this, we collect RGB and IR images that are synchronized with the lidar scans. Using our pose estimates from SLAM and the extrinsic calibrations, we run image analysis on these images to detect defects or other objects of interest and project that information onto the point cloud.

Our point clouds contain not only x, y, z, r, g, b, and i (thermal intensity) information; each point also stores the probability that it belongs to each class. For bridge inspection, for example, the classes are: (i) sound concrete, (ii) crack, (iii) delamination, (iv) spall, and (v) patch. We then group the points belonging to each class into defect objects in order to calculate and track the dimensions of each defect.
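As a rough sketch of what per-point class probabilities allow, the code below combines the class scores that several images assign to the same point. The fusion rule (multiply and renormalize, which treats observations as independent) is an assumption made for illustration; the source does not describe how the probabilities are actually computed or updated, and the function names are hypothetical.

```python
CLASSES = ("sound concrete", "crack", "delamination", "spall", "patch")


def fuse(current, observation):
    """Combine a point's class probabilities with one new image observation.

    Assumes independent observations: probabilities are multiplied
    class by class and renormalized so they sum to 1.
    """
    product = [c * o for c, o in zip(current, observation)]
    total = sum(product)
    if total == 0:
        return list(current)  # contradictory evidence: keep the old estimate
    return [value / total for value in product]


def most_likely_class(probabilities):
    """Name of the class with the highest probability."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return CLASSES[best]


# A point starts with no preference, then is seen as "crack" in two images.
point_probabilities = [0.2] * 5
for image_scores in ([0.1, 0.6, 0.1, 0.1, 0.1], [0.1, 0.6, 0.1, 0.1, 0.1]):
    point_probabilities = fuse(point_probabilities, image_scores)
label = most_likely_class(point_probabilities)
```

Keeping the full probability vector, rather than a single label, lets later observations of the same point strengthen or overturn an earlier classification.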

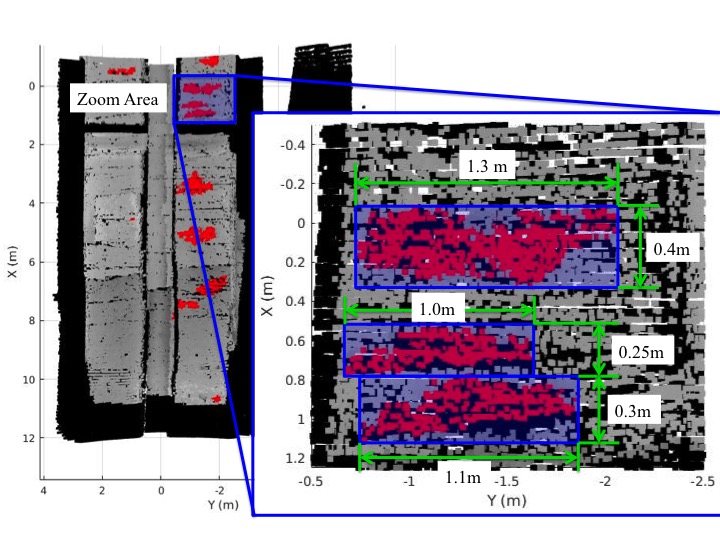

Case Study: Conestogo River Bridge Delamination Detection

An example use case of this technology was the delamination survey of the Conestogo River Bridge. A delamination is a patch of concrete that has detached from the rest of the structure but has not fallen off. Delaminations introduce air voids that act as insulating layers and alter how heat travels through the structure, and this effect is visible in infrared images. In this example, we use image processing tools to detect the delaminations in the infrared images (Fig. 10). The images are labeled on a pixel-wise basis, and these “delaminated” pixels are projected onto the point cloud using ray-tracing. Since the point cloud is to scale, we can then directly measure the size of the defects (Fig. 11).
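The projection of labeled pixels onto the point cloud can be sketched as a simple ray cast: each labeled pixel defines a ray from the camera centre, and the first map point close to that ray receives the label. This is a simplified illustration, not the project's ray-tracing code. It assumes a pinhole camera without distortion, points already expressed in the camera frame, and a hypothetical `radius` tolerance for deciding that a ray "hits" a point.

```python
import math


def pixel_ray(u, v, fx, fy, cx, cy):
    """Unit direction, in the camera frame, of the ray through pixel (u, v)."""
    d = ((u - cx) / fx, (v - cy) / fy, 1.0)
    norm = math.sqrt(sum(c * c for c in d))
    return tuple(c / norm for c in d)


def first_hit(ray, points, radius):
    """Index of the nearest point lying within `radius` of the ray, or None."""
    best_index, best_depth = None, math.inf
    for index, p in enumerate(points):
        depth = sum(a * b for a, b in zip(p, ray))  # distance along the ray
        if depth <= 0:
            continue  # behind the camera
        offset = math.sqrt(max(0.0, sum(c * c for c in p) - depth * depth))
        if offset <= radius and depth < best_depth:
            best_index, best_depth = index, depth
    return best_index


def label_points(labeled_pixels, points, intrinsics, radius=0.02):
    """Indices of points hit by rays through the labeled pixels."""
    hits = set()
    for u, v in labeled_pixels:
        hit = first_hit(pixel_ray(u, v, *intrinsics), points, radius)
        if hit is not None:
            hits.add(hit)
    return hits


# Points in the camera frame (metres); the second is hidden behind the first.
cloud = [(0.0, 0.0, 2.0), (0.0, 0.0, 5.0), (1.0, 0.0, 2.0)]
delaminated = label_points([(320, 240), (570, 240)], cloud, (500, 500, 320, 240))
```

Taking only the first hit along each ray is what keeps a label from leaking onto surfaces that are occluded in the image.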

Figure 11: Conestogo River bridge coloured with the infrared images and delamination spots coloured in red.

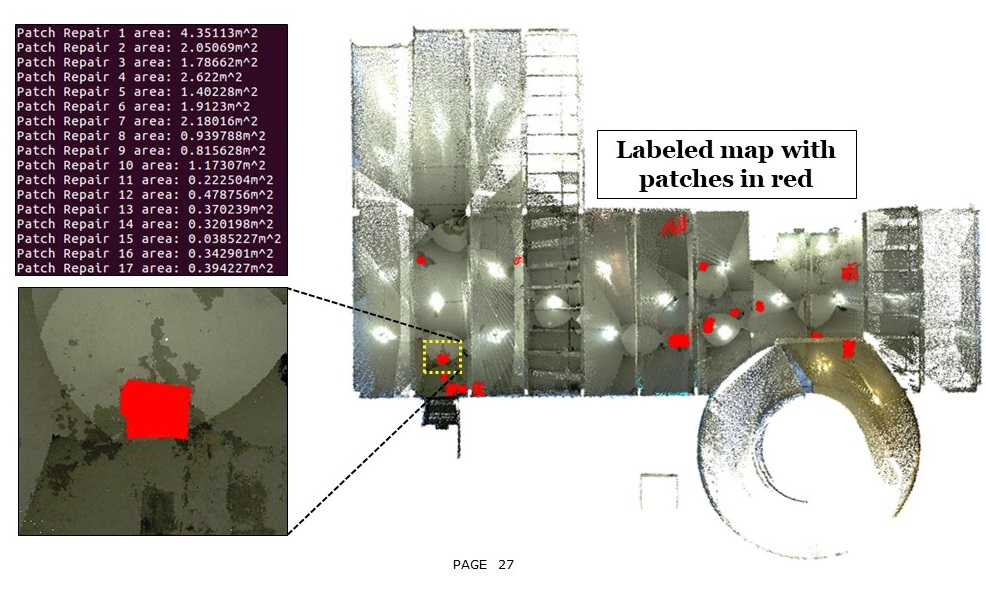

Case Study: Parking Garage

Another example use case for this technology is concrete patch detection and quantification. For this project, we scanned a parking garage in Kitchener, ON. The RGB images from this scan were used to detect concrete patches, which were then projected onto the point cloud map and coloured in red (Fig. 12). In this case, we also clustered together the points associated with each patch and calculated the area of each patch. We were then able to produce an inspection report listing all patches and their sizes. The whole inspection process was automated, apart from data collection, during which the robot was driven manually.
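The clustering and area calculation can be approximated with a grid-based sketch like the one below. It is an illustration under stated assumptions rather than the method used in the project: the patch points are assumed to lie on a flat slab and to be already projected to 2D, neighbouring occupied grid cells are merged into one patch, and the hypothetical `cell` size is assumed to be close to the point spacing.

```python
import math
from collections import deque


def patch_areas(points_xy, cell=0.1):
    """Group 2D points into patches and estimate each patch's area.

    Points are binned into square cells of side `cell` (metres). Occupied
    cells that share an edge form one patch, and the area is the number
    of occupied cells times the cell area. Returns areas, largest first.
    """
    occupied = {(math.floor(x / cell), math.floor(y / cell)) for x, y in points_xy}
    areas = []
    while occupied:
        queue = deque([occupied.pop()])
        cells_in_patch = 0
        while queue:  # breadth-first search over edge-connected cells
            cx, cy = queue.popleft()
            cells_in_patch += 1
            for neighbour in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                if neighbour in occupied:
                    occupied.remove(neighbour)
                    queue.append(neighbour)
        areas.append(cells_in_patch * cell * cell)
    return sorted(areas, reverse=True)


# Two invented patches: a 0.2 m x 0.2 m square and an isolated single cell.
patch_points = [(0.05, 0.05), (0.15, 0.05), (0.05, 0.15), (0.15, 0.15), (1.05, 1.05)]
areas = patch_areas(patch_points)
```

The resulting list of areas is the kind of per-patch quantity that an inspection report can tabulate directly.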

Inspection results of a parking garage in Kitchener, ON, showing the detected patches and a list of their measured sizes.

Video fly-through showing the mapping and inspection results of a parking garage in Kitchener, ON.

Autonomy

Automating data collection for inspection is another area of focus of this work. We have worked on two different GPS waypoint navigation packages in ROS that can be used to automate data collection. Both packages were created in collaboration with Clearpath Robotics.

Open Source Waypoint Navigation

The first implementation strictly used open-source ROS packages with minimal custom nodes. The waypoint navigation package was built to work with Clearpath robots equipped with a GPS, an IMU, a 2D front-facing lidar, and wheel odometry. It allows a user to either input the GPS waypoints or collect the waypoints with the robot ahead of time. Obstacle avoidance is also fully incorporated into the software. Full tutorials can be found here, and the source code can be found here.
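As a minimal sketch of what GPS waypoint following involves, the code below converts latitude and longitude into local east/north offsets in metres and advances through a waypoint list once the robot is within a tolerance. It is not the ROS package described above: the function names, the spherical-Earth flat approximation, and the 1 m tolerance are all assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, spherical approximation


def gps_to_local(lat, lon, origin_lat, origin_lon):
    """Approximate (east, north) offset in metres from an origin.

    Uses an equirectangular approximation, which is reasonable only over
    short distances such as a single bridge or parking structure.
    """
    north = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    east = (
        math.radians(lon - origin_lon)
        * EARTH_RADIUS_M
        * math.cos(math.radians(origin_lat))
    )
    return east, north


def next_waypoint(position, waypoints, index, tolerance=1.0):
    """Skip past every waypoint already within `tolerance` metres of the robot."""
    while index < len(waypoints):
        dx = position[0] - waypoints[index][0]
        dy = position[1] - waypoints[index][1]
        if math.hypot(dx, dy) > tolerance:
            break
        index += 1
    return index


# A waypoint 0.001 degrees of latitude north of the origin (about 111 m).
east, north = gps_to_local(43.001, -80.5, 43.0, -80.5)
target = next_waypoint((0.0, 0.5), [(0.0, 0.0), (10.0, 0.0)], 0)
```

Working in a local metric frame keeps the "have I arrived" check and any path planning in the same units as the robot's odometry and lidar data.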

Video demonstrations of this package are shown below. The video on the left shows standard waypoint navigation with obstacle avoidance. The video on the right shows how it can be combined with a mapping kit for autonomous mapping.

GPS waypoint navigation results, with obstacle avoidance.

Autonomous 3D mapping with GPS waypoint navigation and Mandala-Mapping kit.

Proprietary Waypoint Navigation

Further development was performed with Clearpath Robotics to release a more reliable and user-friendly package. For information on purchasing this package, see Clearpath’s website. Video demonstrations are shown below.

Publications

- Charron, N., McLaughlin, E., Phillips, S., Goorts, K., Narasimhan, S., and Waslander, S. L. (2019). Automated bridge inspection using mobile ground robotics. Journal of Structural Engineering, ASCE, vol. 145, no. 11.

- Phillips, S. and Narasimhan, S. (2019). Automating data collection for robotic bridge inspections. Journal of Bridge Engineering, ASCE, vol. 24, no. 8.

- Phillips, S., Charron, N., McLaughlin, E., and Narasimhan, S. (2019). Infrastructure Mapping and Inspection using Mobile Ground Robotics. In Proceedings of the 12th International Workshop on Structural Health Monitoring (IWSHM 2019), Stanford, CA, USA, Sep. 10-12.

- McLaughlin, E., Charron, N., and Narasimhan, S. (2019). Combining Deep Learning and Robotics for Automated Concrete Delamination Assessment. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction (ISARC), Banff, Alberta, May 22-24.

Students